James Tauber's Blog 2008

blog >

Marking for Deletion in Django

Often you want to give the user the ability to delete objects but enable them to recover them if they change their mind.

One way to do this is to have a boolean flag on the model. The problem with this is

- you have to have the flag on all associated models involved in the object graph to be deleted

- you have to then filter all your queries based on that boolean flag (error prone and inefficient)

An alternative is to have a separate model for the deleted objects. i.e. DeletedFoo alongside Foo, DeletedBar alongside Bar. The schemas are similar (although some foreign keys are to their deleted counterparts instead) and objects are moved from one table to another when deleted (or undeleted).

This has certain advantages over the first approach, but does mean you have to create twice as many models.

A third approach which just occurred to me is to serialize the object graph to be deleted and store that in the database in a single table row. With Django's existing ability to calculate object graphs for deletion and also to load and dump json, this may be fairly straightforward to implement in a generic fashion.

Pinax is currently using the first approach but I'm interested if people have tried the third approach or secret option number four I don't know about yet.

by : Created on Dec. 19, 2008 : Last modified Dec. 19, 2008 : (permalink)

Screencasting Competition Reminder

Firstly, a reminder that there are now just under two weeks to go until the deadline for the First Pinax Screencasting Competition.

I've added a new feature to the site to let you register your interest in participating in the contest, even if you're not yet ready to submit.

If you think you might be making a submission, I strongly recommend you register interest. This will let us gauge whether we have enough people and also lets us contact you (assuming you confirm an email address) in case things change.

by : Created on Dec. 2, 2008 : Last modified Dec. 2, 2008 : (permalink)

Blog Month Over

Well, I did it! I managed to do at least a post a day for the whole of November.

And I also achieved a goal I had that I didn't mention in advance: I wanted to do 50 posts.

I hope you at least enjoyed some of the blog posts.

I will soon finish off the final part of the Voronoi canvas demo and also the song project, but you'll forgive me if I take it easy. Attempting to do 50 posts was actually a lot more stressful and time consuming than I thought it would be.

That said, 30 days is enough to build a habit. I may find it hard NOT to post regularly now. At least I won't feel I HAVE to, though :-)

UPDATE: BTW, any novelists reading make their 50k for NaNoWriMo?

by : Created on Nov. 30, 2008 : Last modified Nov. 30, 2008 : (permalink)

More Thoughts on a New Language

In Thoughts on a New Language I said:

This relates to the whole concept of the "dictionary created by executing a block of statements". I find this notion of a "block of statements" being a first-class object appealing. Imagine a function that, instead of having a return value, simply returns its bound local variables. I guess Python modules are pretty much that. But I'm thinking of the notion within a file.

There are actually three places where Python uses a block of statements to populate a namespace:

- modules

- function bodies

- class definitions

But imagine that it was a first class object. I'm not really thinking about syntax yet, but one could imagine

{ x = 5 y = x + 2 }

or

block: x = 5 y = x + 2

as possibilities for anonymous blocks. To bind the block to a particular variable, we could therefore say:

foo = { x = 5 y = x + 2 }

or

block foo: x = 5 y = x + 2

Now, what if following on from the previous post and ideas from prototype-based languages like Self, Javascript and Lua you could specify a parent to be traversed in case the block didn't have the requested value. Would there be special syntax for specifying the parent or would it just be a value in the block like __parent__?

foo = { __parent__ = bar y = x + 2 }

or

block foo(bar): y = x + 2

In either case, would the evaluation of y be lazy or not?

What about multiple inheritance, which isn't commonly found in prototype-based languages?

Also, would we want to have the notion of parameterized blocks? With the free variable explicit:

foo(x) = { y = x + 2 }

or implicit:

foo = { y = x + 2 }

?

And would you then want to be able to bind that free variable at a later date:

foo % { x = 5 }

assert foo.y == 7

(just to arbitrarily pick % to use as the operator symbol)

Also, taking a cue from an offhand remark in Steve Yegge's Universal Design Pattern post about this sort of stuff ( HT: Jonathan Hartley) imagine you have a monster objects like

orc = { hp = 10 } warg = { hp = 5 }

and you wanted to capture the notion that a boss monster has 2 times the hit points of a normal monster of that prototype. Would you use inheritance without binding the __parent__ yet:

boss = { hp = 2 * __parent__.hp }

and later:

orc_boss = boss % { __parent__ = orc }

?

Could we use boss(orc) as equivalent to boss % { __parent__ = orc } ?

Some interesting possibility here, just playing around with the notion of a block as first class object with inheritance and parameterization.

And another crazy though: could this language have something akin to list comprehensions but for these blocks so you could compactly define them programmatically where appropriate? This is a contrived example, but Imagine you have a list of strings and you want to create a block that uses those strings as variable names, all set to an initial value of 1.

That is, you want to get

{ foo = 1 bar = 1 baz = 1 }

from var_list = ["foo", "bar", "baz"]

The main challenge seems to be just coming up with syntax for turning strings into variable names. But imagine (just for the sake of example) that ~x meant the variable whose name is the value of x. Then you could (possibly) do something like:

{ ~x = 1 for x in var_list }

This is all just thinking aloud :-)

by : Created on Nov. 30, 2008 : Last modified Nov. 30, 2008 : (permalink)

Endo vs Exo

Yesterday I was starting to get nervous about the path I'd started to go down with the generic groups app for Pinax. I was basically building a single centralized app through which different types of groups would be managed via configuration. I had a good chat with Eric Florenzano about it and we agreed that it just felt wrong but I couldn't think of an alternative.

Then this morning I had a brainwave—a shift in approach that I described on the mailing list as an "exo" approach rather than the previous "endo" approach. Instead of having a single, centralized "groups" app that's highly configurable and lets you plug different pieces in, I realised a better approach would be to simply provide the building blocks for site developers to create their own group apps bottom-up.

The advantage of the "exo" approach is that it makes group customization more like normal Django development. It's more flexible if you want to do some things differently.

After thinking about it some more, it occurred to me that the endo vs exo distinction is quite important when crafting extensible software. It's not that exo approach is always better. It's just different.

The endo approach is that of a framework whereas the exo approach is that of a library. Even a single system like Django may have some aspects that are endo and others that are exo.

An endo approach says to a developer: "we'll provide you the core with slots you can plug your pieces into". An exo approach says to a developer: "we'll provide pieces you can plug together yourself".

In Pinax, django-notifications takes a more endo approach (you register your notification types with the notification subsystem) whereas django-mailer takes an exo approach (it's just there when you want to send mail).

If you need to "register" an entity, an endo approach is probably being used.

If you put your B in the configuration of A then A is endo. Whereas if your B just calls A to do something then A is exo.

Seems a useful distinction. What do people think?

by : Created on Nov. 29, 2008 : Last modified Nov. 29, 2008 : (permalink)

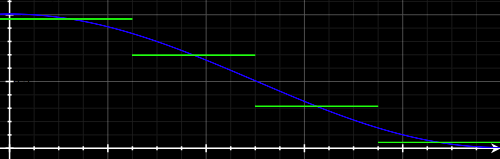

Voronoi Canvas Tutorial, Part III

In Part I, we wrote some code that enabled the user to draw points on a canvas (as long as they weren't too close to an existing point. In Part II we added the drawing of a horizontal line wherever the mouse is.

Next we're going to draw one more type of object which will be at the heart of Fortune's algorithm for Voronoi diagrams. Remember earlier this month in From Focus and Directrix to Bezier Curve Parameters I wanted to be able to calculate quadratic Bézier curve parameters from a focus and horizontal directrix. Now I can explain why :-)

A point (called the focus) and a line (called the directrix) are enough to define a parabola. For Fortune's algorithm, I need to, for each point and for the sweep line, draw the corresponding parabola. The canvas element doesn't have a parabola-drawing primitive. However, it does support Bézier curves.

A quadratic Bézier curve is actually a section of a parabola, so I what I wanted was a way of converting the focus and directrix into the parameters for a quadratic Bézier curve that canvas would understand. That was the motivation for the mathematics in that post.

Implementing those equations in Javascript gives us:

function drawParabola(fx, fy, dy) { var alpha = Math.sqrt((dy*dy)-(fy*fy)); var p0x = fx - alpha; var p0y = 0; var p1x = fx; var p1y = fy + dy; var p2x = fx + alpha; var p2y = 0;

context.strokeStyle = "rgb(100, 100, 100)"; context.fillStyle = "rgba(0, 0, 0, 0.05)"; context.beginPath(); context.moveTo(p0x, p0y); context.quadraticCurveTo(p1x, p1y, p2x, p2y); context.stroke(); context.fill(); }

This not only draws the parabola but fills the region above it (which is relevant to our purpose).

Now all we need to do is run that for each point:

function drawParabolae(dy) { $.each(points, function() { if (dy > this[1]) { drawParabola(this[0], this[1], dy); } }); }

and then add a call to that function to our mousemove handler:

... context.clearRect(0, 0, 600, 400); drawHorizontalLine(oy); drawParabolae(oy); redrawDots(); ....

You can see the result here. I was actually surprised how snappy it is, even with a lot of points. Using the Bézier curve rather than drawing the points manually was definitely the way to go.

If you think about the fact that a parabola is the locus of points equidistance from the focus and the directrix you can start to see how Fortune's algorithm is going to work. I'll make that more explicit in the next and final post.

by : Created on Nov. 29, 2008 : Last modified Nov. 29, 2008 : (permalink)

Bayesian Classification of Pages on This Site

A year ago, in Automatic Categorization of Blog Entries, I talked about automatically categorizing (or at least suggesting categories for) blog posts using a Bayesian classifier.

I finally decided to give it a go, using Reverend.

To train it, all I basically did was:

from reverend.thomas import Bayes

from leonardo.models import Page

guesser = Bayes()

for page in Page.objects.all():

for category in page.categories.all():

guesser.train(category.term, page.content)

Let's pick 10 random blog entries and see how it goes guessing them:

- Pinax Progress III (nothing conclusive)

- The Big 040 (personal 38.7%) CORRECT!

- TeX for Leonardo (leonardo 42.8%) CORRECT!

- Disclosure: Trying Out Google Analytics (nothing conclusive)

- Demokritos 0.1.0 Released (nothing conclusive)

- Programming Languages I've Learned In Order (software craftsmanship 44.8%) CORRECT!

- New Site Look (nothing conclusive)

- Implementing the Unicode Collation Algorithm in Python (nothing conclusive)

- Leopard After Two Weeks (os x 43.5%) CORRECT!

- Film Project Update: A Little More Editing (nothing conclusive)

By "nothing conclusive" I mean that the highest guess was less than 2%. It is interesting that guesses were either < 2% or were around 40% and, in the latter case, they were always correct. So at least no false positives. I wonder what the reason for the false negatives were, though.

Next I tried it against all pages (that had a category). There were 284 cases where no prediction over 5% was made. But in the 288 cases where a prediction over 5% was made, in 287 cases the prediction was correct. In only 1 case was a wrong prediction over 5% made. And it was simply that the classifier thought poincare project should have been tagged "poincare project" :-)

So the precision was basically 100% but the recall 50%.

by : Created on Nov. 29, 2008 : Last modified Nov. 29, 2008 : (permalink)

Voronoi Canvas Tutorial, Part II

In the first part, we implemented the ability to draw dots on the canvas.

Now we're going to draw a horizontal sweep-line where ever the mouse is. The reason for doing this will become clearer in the final two posts.

Here's our function for drawing the horizontal line:

function drawHorizontalLine(y) {

context.strokeStyle = "rgb(200,0,0)";

context.beginPath();

context.moveTo(0, y);

context.lineTo(600, y);

context.stroke();

}

Because we're going to want to clear the canvas every time the mouse moves, we need to be able to redraw the dots. Fortunately, we stored the coordinates in the points array so we can just write:

function redrawDots() {

$.each(points, function() {

drawDot(this[0], this[1]);

})

}

And then finally hook this up to the mousemove event:

$('#canvas').mousemove(function(e) {

var pos = $('#canvas').position();

var ox = e.pageX - pos.left;

var oy = e.pageY - pos.top;

context.clearRect(0, 0, 600, 400);

drawHorizontalLine(oy);

redrawDots();

});

You can see the result after this second stage here.

Now, the fact we have points and horizontal lines might give you a clue as to what's next, particularly in light of a post of mine earlier this month :-)

by : Created on Nov. 28, 2008 : Last modified Nov. 28, 2008 : (permalink)

Broken Bots

This site is being bombarded by requests from a bot of the form:

GET <real_path_to_blog_post>/form.add_comment/

Now, each page does have a form with class="add_comment" and I have jQuery that references $('form.add_comment'). But what kind of broken bot is trying to follow those as links?

That's a rhetorical question. I can tell you the answer:

Mozilla/5.0 (compatible; SkreemRBot +http://skreemr.com)

But I do notice bots accessing all sort of weird URLs. I don't mean looking for exploits—I mean what just appear to be bugs.

It's annoying given each 404 gets emailed to me :-)

by : Created on Nov. 28, 2008 : Last modified Nov. 28, 2008 : (permalink)

Thoughts On A New Language

My favourite rejected/withdrawn Python Enhancement Proposal (PEP) is Steven Bethard's PEP 359 based on an idea by Michele Simionato. That's not to say I disagree with Guido not wanting it in Python, but I like aspects of the idea conceptually as part of a possible Python-like language.

Consider the class statement (take from the PEP):

class C(object): x = 1 def foo(self): return 'bar'

This, as the PEP points out, is equivalent to:

C = type('C', (object,), {'x':1, 'foo':<function foo at ...>})

And more generally:

class <name> <bases>: __metaclass__ = <metaclass> <block>

is syntactic sugar for:

<name> = <metaclass>("<name>", <bases>, <dictionary created by executing block>)

The PEP points out that the class statement nicely avoids the need to mention the name twice and also does the task of executing a block of statements and creating a dictionary of the bindings that result.

It then proposes a make statement of the following form:

make <callable> <name> <tuple>: <block>

that would basically make the class statement syntactic sugar usable for other things. See the PEP itself for a bunch of interesting this this would allow in Python. I certainly think it makes metaclasses clearer.

But my interest isn't so much in Python, but just thinking about a language where something like this is core.

On a related note: think of relationship between Python function definition statements and lambda expressions. One thing I like about Javascript is that the syntax for named and anonymous functions are so similar. One thing I like about Java is that you can have anonymous classes. I wonder if all this could be supported with one core syntax.

This relates to the whole concept of the "dictionary created by executing a block of statements". I find this notion of a "block of statements" being a first-class object appealing. Imagine a function that, instead of having a return value, simply returns its bound local variables. I guess Python modules are pretty much that. But I'm thinking of the notion within a file.

One could argue Python classes are almost that, but they carry with them two additional features—inheritance and instantiation—that would be, I think, interesting to separate out.

Inheritance could be separate and could be a general property of dictionaries. I think it would be interesting and potentially useful to have dictionaries with bases. Of course it's possible to implement such a notion in Python now (see my final remark in this post).

Because of these other characteristics of inheritance and creating a dictionary from executing a block of statement, Python classes can be useful without ever instantiating them. So I think it would be interesting to have a language where instantiability is just another characteristic that can be added on to a dictionary. Of course, Python lets you do that to some extent now with special methods such as __new__ and __call__.

Which leads me to my final thoughts. While I still think it would be fun to explore a language whose fundamental concepts are built along the lines I've been talking about, I think it is worth noting that Python does pretty much let you implement things this way right now. The biggest takeaway for me from Guy Steele's talk was that a good language is one that can take common patterns and turn them in to things that look like primitives of the language. You just need to look at most Python ORMs to see an excellent example of that.

UPDATE: Now see More Thoughts on a New Language

by : Created on Nov. 28, 2008 : Last modified Nov. 28, 2008 : (permalink)

Voronoi Canvas Tutorial, Part I

Earlier in the month, I introduced Voronoi Diagrams with some Python code for brute-force calculation. There are a number of better algorithms and I'd like to talk about one discovered by Steven Fortune. Rather than implement it in Python, though, I wanted to use it as an opportunity to teach myself how to use the canvas element to build an interactive demonstration of Fortune's approach.

So this is part one (of three four) showing how to use the canvas element (in conjunction with jQuery) to demonstrate Fortune's algorithm for calculating Voronoi diagrams. In this part, we'll just do enough to let the user pick the points.

The canvas element was originally developed by Apple but is now implemented not only in Safari but also Firefox and Opera). It is part of the HTML5 effort.

So first of all, here's our HTML:

<canvas id="canvas" width="600" height="400"

style="border: 1px solid #999;"></canvas>

<div><button id="clear-button">clear</button></div>

Next, we'll declare an array called points which will store the (x, y) coordinates of our points.

var points = [];

We don't want the user to draw points too close to one another, so anyClose is a utility function that tells us if a given (x, y) is too close to an existing point. It in turn uses a utility function distance which calculates the distance between any two points. Note that anyClose uses jQuery's each to iterate over the points.

/* calculate distance between (x1, y1) and (x2, y2) */

function distance(x1, y1, x2, y2) {

return Math.sqrt((x2 - x1) * (x2 - x1) + (y2 - y1) * (y2 - y1));

}

/* are there any points close to (x, y) ? */

function anyClose(x, y) {

var result = false;

$.each(points, function() {

if (distance(x, y, this[0], this[1]) < 20) {

result = true;

return false; // break out of each

}

});

return result;

}

Now we get to the actual canvas bits. Operations are performed on a drawing context which we can get in jQuery with:

var context = $('#canvas')[0].getContext('2d');

Here is a function for drawing a black dot at (x, y):

function drawDot(x, y) {

context.fillStyle = "rgb(0,0,0)";

context.beginPath();

context.arc(x, y, 2, 0, Math.PI*2, true);

context.fill();

}

All that remains now is to hook up our event handlers. First, the click event on #canvas:

$('#canvas').click(function(e) {

/* e will give us absolute x, y so we need to

calculate relative to canvas position */

var pos = $('#canvas').position();

var ox = e.pageX - pos.left;

var oy = e.pageY - pos.top;

/* only draw dot and add to points list if

no other points are close */

if (!anyClose(ox, oy)) {

drawDot(ox, oy);

points.push([ox, oy]);

}

return false;

});

And second, the clear button:

$('#clear-button').click(function() {

points = [];

context.clearRect(0, 0, 600, 400);

});

You can see the result here.

by : Created on Nov. 27, 2008 : Last modified Nov. 27, 2008 : (permalink)

Song Project: Fattening Things Up

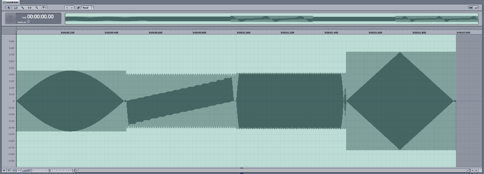

So this is where we left off our song project:

download if embed doesn't work

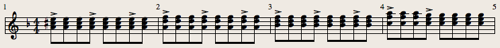

To me it feels a bit thin. To fatten it up, we're going to add a nice phat synth and crunchy guitar. Here's the synth riff:

And here is what it sounds like:

download if embed doesn't work

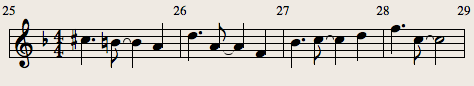

Now here's the guitar riff:

It's just the chords played either as open fifths or octaves with a lick at the end. Notice, though, that (except for the last measure) it's accented 2+3+3 in contrast to the 3+3+2 of things like the piano. These cross rhythms just make things a little more interesting. Here is what it sounds like:

download if embed doesn't work

So here is the combined version with all the instruments so far.

download if embed doesn't work

This will be the instrumental part of our verse.

It's often hard when first adding a instrument or two not to put it too loud in the mix because you are enamoured by its novelty (I'm saying this from experience). But with the tracks we've just added, it is important that they are fairly subtle. You don't want them to draw too much attention. They can still be soft but noticable in their absence. To see this, compare the final recording with the one at the top of the page. I think you'll agree there's a big difference.

In subsequent posts, we'll add the chorus, build out the song's structure and add some vocals.

All material for this project is made available under a Creative Commons BY-NC-SA license so you are free to redistribute and remix with attribution but under the same license and not commercially.

by : Created on Nov. 27, 2008 : Last modified Nov. 27, 2008 : (permalink)

Church Encoding in Python

If we define true and false with the following combinators (in Python):

TRUE = lambda a: lambda b: (a) FALSE = lambda a: lambda b: (b)

then if-then-else can be implemented simply by applying a predicate to two arguments: the then/true case and the else/false case.

For example:

(TRUE)(True)(False) == True (FALSE)(True)(False) == False

Now if we define:

AND = lambda a: lambda b: (a)(b)(a) OR = lambda a: lambda b: (a)(a)(b) NOT = lambda a: lambda b: lambda c: (a)(c)(b)

we can do boolean logic with only reference to function application.

For example:

(AND)(TRUE)(FALSE) == (FALSE)

This is a little hard to verify in Python so we can use our if-then-else trick:

(AND)(TRUE)(FALSE)(True)(False) == False

Our definition of TRUE and FALSE is known as the Church encoding of the booleans.

We can also Church-encode a pair, or cons, and define car and cdr appropriately:

CONS = lambda a: lambda b: lambda c: (c)(a)(b) CAR = lambda a: (a)(TRUE) CDR = lambda a: (a)(FALSE)

If the definitions of CAR and CDR seem odd, note that the magic is really in CONS.

(CAR)((CONS)(1)(2)) == 1 (CDR)((CONS)(1)(2)) == 2

But this means CONS makes a nice way of deferring our (True)(False) trick to "unchurch" Church-encoded booleans into Python booleans:

UNCHURCH_BOOLEAN = (CONS)(True)(False)

Now we can say:

(UNCHURCH_BOOLEAN)((NOT)(TRUE)) == False (UNCHURCH_BOOLEAN)((OR)(TRUE)(FALSE)) == True

The natural numbers can also be Church-encoded:

ZERO = FALSE SUCC = lambda a: lambda b: lambda c: (b)((a)(b)(c))

We can then define:

ONE = (SUCC)(ZERO) TWO = (SUCC)(ONE) THREE = (SUCC)(TWO) FOUR = (SUCC)(THREE)

and so on. Here's a little Python function for "churching" numbers:

def church_number(n): return SUCC(church_number(n - 1)) if n else FALSE

We can define addition, multiplication and exponentiation as follows:

PLUS = lambda a: lambda b: lambda c: lambda d: (a)(c)((b)(c)(d)) MULT = lambda a: lambda b: lambda c: (b)((a)(c)) EXP = lambda a: lambda b: (b)(a)

Of course, what would be nice is if we had an easy way to unchurch our Church-encoded numbers so we could see if these work. Well, it turns out that's easy to do:

UNCHURCH_NUMBER = lambda a: (a)(lambda b: b + 1)(0)

So

(UNCHURCH_NUMBER)(ZERO) == 0 (UNCHURCH_NUMBER)(ONE) == 1 (UNCHURCH_NUMBER)(TWO) == 2

and so on.

(UNCHURCH_NUMBER)((PLUS)(THREE)(TWO)) == 5 (UNCHURCH_NUMBER)((MULT)(THREE)(TWO)) == 6 (UNCHURCH_NUMBER)((EXP)(THREE)(TWO)) == 9

by : Created on Nov. 26, 2008 : Last modified Nov. 26, 2008 : (permalink)

On Insurance

If I offered you a 1 in 2 chance of winning $1 for 60 cents you'd probably say no. After all, the expected value is 50 cents so you're more likely to lose than win.

And yet, if I offered you a 1 in 2,000 chance of winning $1,000 for 60 cents, you might agree. The expected value is the same, but the payoff (even though far less likely) is much higher. If I offered you a 1 in 20,000 chance of winning $10,000, you might be even more like to give it a go "just in case" you win.

Lotteries and casinos rely on the fact that, even with big payoffs, things will average out in their favour.

Now consider insurance...

If there's a 1 in 2 chance an event might cost you $1, you're unlikely to take out insurance with a premium of 60 cents. However, if there's a 1 in 2,000 chance an event might cost you $1,000, you are more likely to be willing to be willing to pay a premium of 60 cents. Even if an event has only a 1 in 20,000 chance of happening, if it would cost you $10,000, you might consider paying a premium of 60 cents for insurance. If the 1 in 20,000 event would cost you $1,000,000 you might be willing to pay $60 or more in premium.

An insurance company (or even non-profit cooperative) is no going to offer you a premium less than the expected value. If there is a 1 in 20,000 chance they'll have to pay out $1,000,000 you're going to have to pay at least $50 in premiums.

The important point here is that insurance only makes sense if the likelihood of needing it is low. If the need is high, the premium will be close to the payout and, it's probably just not worth it. (Literally "probably" :-)

Insurance is about trading off an unlikely high cost for a definite low cost.

What about a situation where the event is almost certainly going to happen? In such a case, insurance is going to cost more than its worth. If it is known I'm going to have an event which will cost me $1,000, no insurance company is going to cover me for a premium less than $1,000.

And yet, people take out health insurance which covers highly probably or even certain events.

If your insurance covers an annual checkup, or new glasses every three years or a teeth clean every six months, or a regular therapy appointment: IT'S GOING TO COST MORE THAN IT'S WORTH.

In the case of predictable but not-that-frequent events, a case can definitely be made for trading off an infrequent (but likely) high cost for a frequent low cost. But this is NOT insurance. It's really just a type of amortization.

The fact that likely events get bundled in with real insurance is one of the reasons, I think, why health insurance is so expensive in the US.

POSTSCRIPT: A while ago I read about people in India who offer you insurance against the fine for riding on the train without a ticket. The cost of taking the insurance is less than the cost of the ticket, so morals aside, you're better off taking the insurance and not buying a ticket. Clearly this is a price signal that the ticket-to-fine ratio is higher than the chance of getting caught and either the ticket price should be lowered, the fine increased, or the chance of catching culprits be increased.

by : Created on Nov. 26, 2008 : Last modified Nov. 26, 2008 : (permalink)

Steele's Growing a Language is a Masterpiece

If you are at all interested in differences between programming languages and the trade offs a programming language designer must make, and if you haven't already seen it, you really must watch Growing a Language, Guy Steele's Keynote from the 1998 OOPSLA conference.

Not only is the content fascinating, but there's a wonderful twist he starts to reveal at 7:45 (through to 9:00) to do with the whole style of the presentation (which does seem odd, though humorous, at first). It's like something out of Gödel, Escher, Bach where the structure, as much as the surface content, is where the message lies.

I don't want to say too much more, lest I give things away, but the talk really is a masterpiece.

by : Created on Nov. 25, 2008 : Last modified Nov. 25, 2008 : (permalink)

First Pinax Screencast Competition

The goal is to produce a screencast up to 20 minutes long, showing what can be built with Pinax in a short amount of time.

You have 3 weeks to submit your entry or entries and then the core developers of Pinax will judge the best entries. You can submit as many times as you like.

Judging Criteria

Submissions will be judged on three criteria with certain weightings:

- the overall video and audio quality of the screencast (20%)

- how impressive the end result of what you build is (30%)

- how well it shows off Pinax (50%)

You can have some stuff developed in advance (i.e. the entire site you build doesn't have to be built in 20 minutes) but that's a tradeoff you have to make between the second and third criteria. More done in advance means probably a more impressive end result but shows off Pinax less; more you actually do during the screencast, might mean a less impressive result at the end but shows off Pinax more.

License

We require all videos to be made available under a Creative Commons Attribution-Share Alike license so we can distribute them in edited and unedited form.

Prize

The first prize is a $100 Amazon Gift Card with a runner up prize of a $40 Amazon Gift Card. Even though you can submit more than one entry, the same person won't be able to win both prizes (even if they have the 1st and 2nd best submissions).

See http://contests.pinaxproject.com/contest/3/

by : Created on Nov. 24, 2008 : Last modified Nov. 24, 2008 : (permalink)

EQ, Part I

In the previous song project post, I mentioned that the tracks had some EQ on them. There are a number of different reasons for using EQ:

- on individual instrument tracks to bring out particular characteristics of that instrument

- on individual instrument tracks to make multiple instruments sit better together

- on the overall mix to reduce listener fatigue

- on the overall mix to make up for deficiencies in the listening environment

The third is normally done at mastering stage and the fourth by both mastering engineers (preempting deficient listening environments) and the listeners themselves. I'm not going to talk about either of them here.

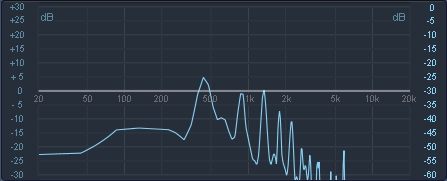

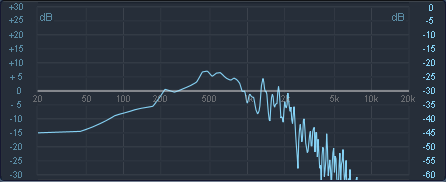

Here is a graph showing the frequencies coming from the particular piano sound I'm using when I play an A.

Note that the main peak is that the fundamental frequency of 440Hz. There are also bumps at multiples of 440: 880Hz, 1320Hz, 1760Hz and so on. It is the amplitude of those overtones and how they change over time that really makes the note sound like a piano and not something else. Notice that there are also other little bumps—lots is going on: from other strings resonating to the actual sound of the hammer hitting the string (as opposed to just the string vibration itself).

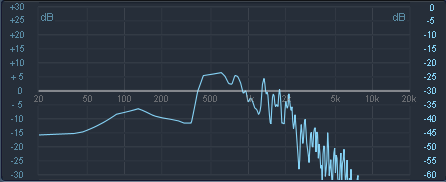

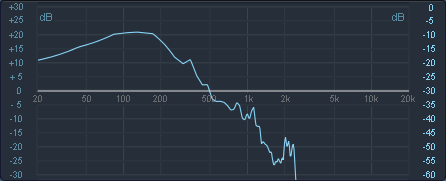

But typically you aren't just playing a single note. Here is the same graph for the right hand piano riff from the song:

The important thing here is just the range in which the frequencies occurs.

And here is the full piano riff, both left and right hands:

Because the piano parts are fairly high, you see there isn't much happening below 220Hz (which is A2, the lowest note the piano plays in the song).

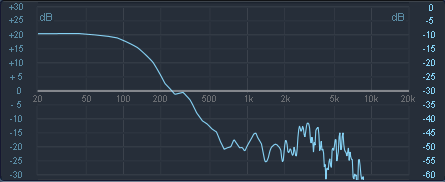

If you compare that to the bass guitar riff:

you'll notice most of the action is happening between 55Hz and 220Hz. But notice that there are other bumps as well. Boosting in that range will change how much those other sounds with come through.

I'm still a novice at mix engineering but one thing I've definitely learnt is that you want to treat different drums separately during EQ. In particular you want to EQ your kick and snare separately.

Of course, drums aren't playing a pitch but they have a very complex set of frequencies and lots going on at different parts of the spectrum.

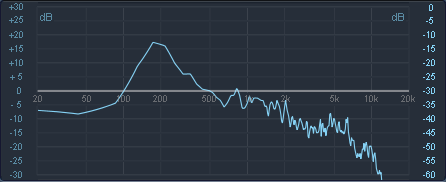

Here's the raw analysis of the kick drum:

Notice most of it is sub-100Hz but there is significant stuff going on above 1000Hz (other vibrations beside the skin, the initial hit sounds as opposed to the sound that reverberates in the chamber)

Here's the snare for comparison:

You can see the main frequency is around 200Hz but there are other bumps caused by things like the actually sound of the stick hitting the skin. In a subsequent post, I'll include sound samples so you can here the effect of boosting different frequencies.

In additional to controlling the sound of the individual instruments, there is also the relationship between the instruments as I mentioned at the start. In this particular song, the piano doesn't go low enough to really muddy the bass, but if it did, I might want to attenuate the low frequencies of the piano. Making sure the snare comes through is important, so I could boost it around 200-300Hz or narrowly scoop out that frequency from other instruments.

In the recordings you've heard so far, the piano does have a drop off below 200Hz (even though it doesn't really need it); the snare is boosted at 200Hz and 800Hz; the kick drum is boosted at 60Hz, 1500Hz and 3500Hz but attenuated at 200Hz; and the bass guitar is boosted at 80Hz. These are by no means final numbers; more of a default to use as a starting point.

And there's a whole other dimension to all this one vocals are added :-)

by : Created on Nov. 23, 2008 : Last modified Nov. 23, 2008 : (permalink)

Preformatting Comments that Still Wrap

For a while, it's frustrated me (and I'm sure some of my commenters) that indentation in comments is lost, particularly given the code snippets people often paste in.

I didn't want to make all comments pre-formatted so my first thought (which I implemented locally) was to add a boolean field on comments where a user could elect to have their comment pre-formatted. That would be annoying for the non-code parts of their comments, though. So my second thought (which I also implemented locally) was to have a toggle where a comment could alternatively be viewed as pre-formatted or not.

But in tweaking that I was reminded of the CSS2 property white-space. In particular, the value pre-wrap which honours multiple consecutive whitespace characters but still wraps to fit in the required width of the box. According to quirksmode.org it's not understood by FF2 or IE6/7. A value of pre might work in that case but the wrapping part of pre-wrap is really nice.

You can see it in action in the first comment of First Success With Combinatory Python (unless you are running FF2 or IE6/7).

If anyone knows of a simple fallback to pre I can do for those browsers, I would be interested in trying it.

by : Created on Nov. 23, 2008 : Last modified Nov. 23, 2008 : (permalink)

First Success With Combinatory Python

In More Questions on the Path to Combinatory Python I wondered how to write an 'add' function such that not only

(add)(2)(2) == 4

worked but also things like:

(add)(add)(1)(2)(3) == 6 (add)(1)(add)(2)(3) == 6

If we throw in a unary function like 'neg' then things like this should work too:

(neg)(5) == -5 (neg)(neg)(5) == 5 (add)(3)(neg)(2) == 1

Here's how I started to think about it. An expression such as (add)(2)(2) could be described as having the signature "200". It consists of subexpressions requiring, respectively 2, 0 and 0 arguments. Just (add)(2) would have a signature "20" which is equivalent to a signature of 1.

Using this notion:

- (add)(add)(1)(2)(3) has signature 22000

- (add)(1)(add)(2)(3) has signature 20200

- (neg)(5) has signature 10

- (neg)(neg)(5) has signature 110

- (add)(3)(neg)(2) has signature 2010

It is easy to see that for an expression to be complete (i.e. not partial) it must have a signature ending in 0. But there is slightly more to it that than. Basically, start off with a score of 1 and work from left to write, every "2" you encounter increases the score by 1 and every "0" decreases it by 1. A "1" has no effect. When you hit a score of 0 you're complete.

Every complete signature has a particular bracketing of function application. For example, expressions of signature 112100 can be evaluated as follows:

def eval112100(a, b, c, d, e, f): return a(b(c(d(e))(f)))

and each partial signature has a function that can dispatch evaluation to another function depending on the number of args the next arg takes (relying on a function like add being annotated with add.args = 2).

def eval1121(a, b, c, d): return lambda e: ( eval11210 if e.args == 0 else eval11211 if e.args == 1 else eval11212 if e.args == 2 else ... )(a, b, c, d, e)

I was still struggling to implement this in a recursive way that could handle unlimited depth and then I saw in a comment that Eric Wald came up with a solution:

def combinatoric(n): def decorator(f): @wraps(f) def wrapper(x): if callable(x): return lambda y: combinatoric(n)(f)(x(y)) elif n > 1: return combinatoric(n - 1)(wraps(f)(partial(f, x))) else: return f(x) return wrapper return decorator

and so you then say

@combinatoric(1) def neg(x): return -x

@combinatoric(2) def add(x, y): return x + y

and it all works. The use of wraps is optional but including it means partial add will have the name add not wrapper.

As Eric points out in his comment, this still doesn't solve the (add)(M)(5) issue but I have some thoughts on that for a subsequent post.

by : Created on Nov. 22, 2008 : Last modified Nov. 22, 2008 : (permalink)

Relations with Python Named Tuples

Back in 2006, I wrote an entry called Python Tuples are Not Just Constant Lists which, after the Dr Horrible covers is my most visited blog post ever.

In it, I suggest that:

the index in a tuple has an implied semantic. The point of a tuple is that the i-th slot means something specific. In other words, it's a index-based (rather than name based) datastructure.

In that same post, I pointed out the connection between the relational algebra and this notion of a tuple and further suggested that:

it might be useful to have the notion of a tuple whose slots could additionally be named and then accessed via name.

I implemented aspects of this in my initial explorations of relational python. Basically a relation in relational algebra is a set of dictionaries (called tuples) where each dictionary has identical keys (called attributes). In Basic Class for Relations, I actually use Python tuples internally but they go in and out as dictionaries. As I said in that post:

Basically, I store the each tuple internally as a Python tuple rather than a dictionary and the relation also keeps an ordered list of the attributes which is used as the index into the tuples. Amongst other things, this gets around dictionaries not being hashable. It's also a storage optimization akin to using slots for Python attributes.

Here is a slightly cleaned up version of my code at the time:

class Rel: def __init__(self, *attributes): self.attributes_ = tuple(attributes) self.tuples_ = set() def add(self, **tupdict): self.tuples_.add(tuple([tupdict[attribute] for attribute in self.attributes_])) def attributes(self): return set(self.attributes_) def tuples(self): for tup in self.tuples_: tupdict = {} for col in range(len(self.attributes_)): tupdict[self.attributes_[col]] = tup[col] yield tupdict

One could then say stuff like:

rel1 = Rel("ENO", "ENAME", "DNO", "SALARY") rel1.add(ENO="E1", ENAME="Lopez", DNO="D1", SALARY="40K") rel1.add(ENO="E2", ENAME="Cheng", DNO="D1", SALARY="42K") rel1.add(ENO="E3", ENAME="Finzi", DNO="D2", SALARY="30K")

and in subsequent posts I started to show how some relational operations could be performed on this datastructure.

Well, now in Python 2.6, some of this can be simplified. Python 2.6 introduced a wonderful new collections type called a named tuple — a tuple whose slots can also be addressed by name.

Now I can do something similar to Rel above as follows:

from collections import namedtuple class Rel: def __init__(self, typename, field_names): self.tuple_type = namedtuple(typename, field_names) self.tuples = set() def add(self, **tupdict): self.tuples.add(self.tuple_type(**tupdict)) def attributes(self): return set(self.tuple_type._fields)

and use it as follows:

rel1 = Rel("EMPLOYEE", "ENO ENAME DNO SALARY") rel1.add(ENO="E1", ENAME="Lopez", DNO="D1", SALARY="40K") rel1.add(ENO="E2", ENAME="Cheng", DNO="D1", SALARY="42K") rel1.add(ENO="E3", ENAME="Finzi", DNO="D2", SALARY="30K")

then:

>>> rel1.attributes() set(['SALARY', 'ENAME', 'DNO', 'ENO']) >>> rel1.tuples set([EMPLOYEE(ENO='E1', ENAME='Lopez', DNO='D1', SALARY='40K'), EMPLOYEE(ENO='E3', ENAME='Finzi', DNO='D2', SALARY='30K'), EMPLOYEE(ENO='E2', ENAME='Cheng', DNO='D1', SALARY='42K')]) >>> for employee in rel1.tuples: print employee.ENO, employee.ENAME E1 Lopez E3 Finzi E2 Cheng

by : Created on Nov. 21, 2008 : Last modified Nov. 21, 2008 : (permalink)

More Questions on the Path to Combinatory Python

I was just thinking yesterday that I need to get back to the posts I was intending to make after my initial post of the month, Two Fun(ctional) Questions. Then tonight Eric Florenzano made an excellent post on Lambda Calculus which is related to my topic of interest.

So let me take one of his examples and raise again the issue from my first post.

First of all, consider the following Python function taking two arguments:

>>> add_ = lambda x, y: x + y

This function works as expected:

>>> add_(5, 4) 9

Eric shows a curried version defined in Python:

>>> add = lambda x: lambda y: x + y

and shows how, when given one number, this returns a function that takes a second number and adds it to the first:

>>> add(5)(4) 9

To emphasize my desire to blur the distinction between functions and constants, I'd like to point out the following works too:

>>> (add)(5)(4) 9

But imagine we want to add three numbers. With our two-argument version, we could say:

>>> add_(5, add_(2, 2)) 9

How would we do that with our curried version? I'm NOT talking about defining a new 'add', I'm talking about using multiple applications of the original 'add' just like we did with 'add_' above.

The following works:

>>> (add)(5)((add)(2)(2)) 9

but note the additional parentheses we had to add. If we wanted to instead defer the application of the final argument we'd have to rewrite it as:

>>> (add)((add)(5)(2)) <function <lambda> at 0x755f0> >>> (add)((add)(5)(2))(2) 9

So the question is: is there a way to modify add (hopefully with just a wrapper that could be applied to any unary function) such that the following work:

(add)(5)(add)(2) (add)(5)(add)(2)(2)

where the former is equivalent to (add)(7) and the latter returns 9?

And is this pretty much the same question I asked in my original post with the very same solution(s)?

By the way, here is another example that should work in any true solution...

If I define

>>> M = lambda x: (x)(x)

then we should be able to get

(add)(M)(1)

to return 2.

Or is this whole hybrid approach destined to failure and the only real way to use combinators is with Church-encoded numerals?

by : Created on Nov. 20, 2008 : Last modified Nov. 20, 2008 : (permalink)

Post Length By Month

I made the comment in my Half Time Report that I think I've managed to stick to "posts no shorter than normal and no longer".

Alas the data don't match that.

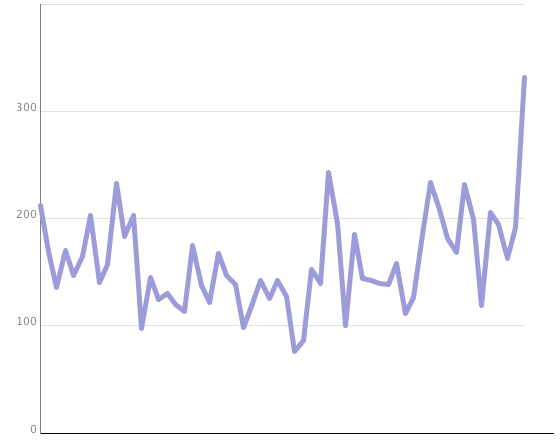

Here's a graph of average post length (just len(page.content.split())) by month:

Clearly the posts this month are longer on average. And because I'm writing more of them, the last point of this next graph is no surprise.

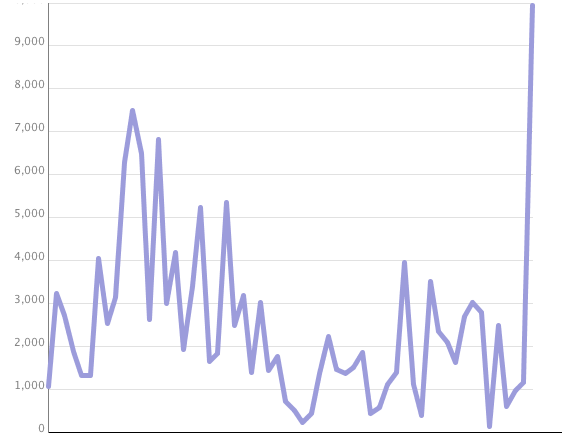

This is a graph with post lengths totalled for each month:

And obviously this month has a fair bit to go. What's more interesting though is my first year of blogging saw growth in total words posted per month grow to a peak around 7500 in December 2004 and then a clear trend down to the trough in August 2006 when I posted just 3 short posts the whole month.

These graphs were done with IBM's Many Eyes simply because Apple Numbers fails miserably at charting anything but the smallest data sets.

by : Created on Nov. 20, 2008 : Last modified Nov. 20, 2008 : (permalink)

The Long and Short of Mathematics

I've previously talked about Oxford's Very Short Introduction series. My first introduction to it (via a recommendation from Greg Mankiw) was Timothy Gowers' Mathematics: A Very Short Introduction which is the best little (160 page) book I've ever read on what mathematics is really about.

A few weeks ago, I bought The Princeton Companion to Mathematics which weighs in at 1008 pages. It's sweeping vista of pure mathematics, and probably the best big book I've ever read on pure mathematics in general. It provides survey articles on many different areas within pure mathematics from both a conceptual and historical viewpoint. I would say most of the book requires some college-level background in mathematics and some sections would best suit graduate students (although to give them breadth rather than depth) but it's the kind of book that you can dive in to at any point and learn something.

So it's interested that the editor of the PCM is the same Timothy Gowers that wrote the Oxford Very Short Introduction.

Well done, Professor Gower. You have succeeded in producing what I think are the best small and large single volume books on mathematics.

Just like in Greek Lexicography we have the "Little Liddell", "Middle Liddell" and "Big Liddell" (referring to the abridged, intermediate and full versions of Liddell and Scott's A Greek-English Lexicon) I think these books should be known as "Little Gowers" and "Big Gowers" :-)

by : Created on Nov. 19, 2008 : Last modified Nov. 19, 2008 : (permalink)

Discrete Cosine Transforms Part 1

I've often been intrigued by the lossy part of JPEG compression so I thought I'd explore Discrete Cosine Transforms and their use in JPEG as a short multi-part blog series.

In this part, lets just talk about what a "discrete cosine" function is and then in the next couple of posts look at how the concept can be combined with basic linear algebra to break up images into components in such a way that you can throw out some components with minimal effect on the perceived image. It's quite clever but the mathematics is fairly straightforward.

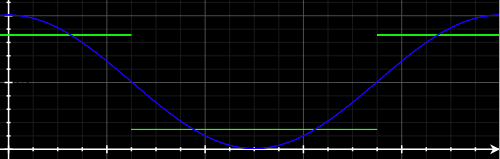

Let's start with a single cycle of a cosine function shifted up and scaled so its values range from 0-255 instead of from -1 to +1. To make it discrete, we'll divide it up into four and simply take the value of the scaled cosine function at the midpoint of each of our four sections:

Here our four discrete values are 217, 39, 39, 217 .

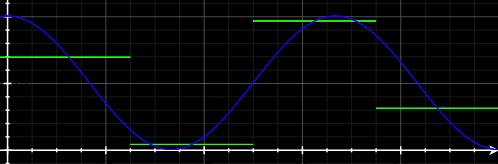

Now let's do the same for one and a half cycles:

which yields values of 176, 11, 245 and 80.

Now half a cycle:

which yields 245, 176, 80 and 11 and zero cycles:

which gives us 255, 255, 255, 255

We've basically calculated values for 0 thru 3 half cycles. If we wanted to split the cosine into N pieces instead of 4, we'd calculate values for 0 thru N-1 half cycles.

But, in summary, for N = 4:

- k = 3: 176, 11, 245, 80

- k = 2: 217, 39, 39, 217

- k = 1: 245, 176, 80, 11

- k = 0: 255, 255, 255, 255

by : Created on Nov. 18, 2008 : Last modified Nov. 19, 2008 : (permalink)

Fixing Relative Links In Entries

Occasionally I hear from someone who finds the links in my entries are broken in their feed reader, especially if they are reading a syndicated version of my entries.

The reason is that I have relative links which are not being made absolute by the reader (or syndication code).

Many readers (such as Google Reader) treat relative links as being relative to the feed itself, so /blog/ gets converted to http://jtauber.com/blog/. But even if the reader does, this, that doesn't help with syndicated entries unless the syndicator does some processing. Some do but others don't.

There's nothing wrong with relative links in Atom content, but if you use them you really should have an xml:base attribute to help a reader deal with them properly. I've now updated all my entries to include an xml:base. I'll see how many planets and other syndications pass that through when placed on the entry. I wonder if the content element would be better.

In the process of making the change, though, I noticed my entry/link[@rel="alternative"] were also relative which is, I suspect, a no no. So I've made them absolute as well.

I'm going to do a little bit more experimentation, but things should work now. I may still have goofed up somewhere but I'm fairly confident now that if links are broken then it's either a bad reader or bad syndication involved. Either way, please let me know in a comment below if you encounter any problems here on in.

UPDATE 1: The atom feed of the unofficial planet python doesn't pass through the xml:base but it doesn't need to as it has made all links absolute (and was doing so before my change)

UPDATE 2: I'm afraid that Advogato's syndication is just plain broken. In their RSS 2.0 feed (why not provide Atom!?), they make content links absolute but they do so by treating them relative to www.advogato.org !! My only suggestion is to just avoid Advogato syndication all together. I'm tempted to turn it off.

by : Created on Nov. 17, 2008 : Last modified Nov. 17, 2008 : (permalink)

Context Hierarchies in OmniFocus

I'm giving OmniFocus another chance after I found it didn't stick after I was a beta tester. I actually switched to Things for a while but that didn't stick either. Anyway, that's not actually what I wanted to blog about.

I want to talk about the use of hierarchy in contexts because I've just been reminded of one confusion I had during the beta. I should point out up front, this isn't really intended as a dig at OmniFocus specifically, more a general reflection on the nature of hierarchy and containment versus subsumption. Still with me?

OmniFocus supports GTD-style contexts such as @Online and @Email and also lets you group those contexts into a hierarchy so @Online and @Email can both be under a parent context @Computer.

As one might expect, the parent context subsumes its children. If you are @Online then you must also be @Computer, but you may be @Computer but not @Online (say while on a plane).

In light of this, the behaviour of OmniFocus is odd and almost the complete opposite of what one might expect (or want).

If you put an action in @Online it will appear not only when you set your current context to be @Online but also when you set your context to the more general @Computer. And if you put an action in @Computer, it will only appear if you are @Computer and not if you select the more specific @Online.

To me, this is completely backward. If I am @Online (and, by implication, therefore @Computer) then really I should have available to me @Computer actions as well as @Online actions. Similarly, if I wish to state I am @Computer (and therefore am either not @Online or am leaving whether or not I am unspecified) then I would not want to see those actions that require me specifically to be @Online.

The only conclusion one can draw is that the hierarchy of context in OmniFocus (or other similarly behaving GTD apps) is not about subsumption at all. If you make @A a parent of @B you are not in fact saying that @B implies @A or that @B is a special case of @A. If you use the hierarchy with that implication, it won't work at all. Instead, I think all you are saying is that @A is just a grouping of @B and other contexts you might, from time to time, want to look at together, and not a real context in its own right.

Of course, if you really want to model subsumption, you need a lattice, not a tree.

by : Created on Nov. 17, 2008 : Last modified Nov. 17, 2008 : (permalink)

Half Time Report

We're just over half way through the month so I'd thought I'd give a quick review of how blogging month is going.

First of all, I've successfully written every day and I think I've managed to stick to the sort of stuff I would have blogged about anyway—posts no shorter than normal and no longer.

I've actually written 25 posts (not counting this one) which is appropriate given that the NaNoWriMo folks should be at 25,000 words around now.

At some point, I'll actually do a word count which should be pretty easy in Django :-)

by : Created on Nov. 16, 2008 : Last modified Nov. 16, 2008 : (permalink)

Song Project: Adding Bass and Drums

Let's take the piano riff I previously wrote and add a bass line. This was entirely improvised but you'll hear it's very rhythmically similar to the left-hand of the piano part, even down to the 3+3+2 accents except in the third bar of four where 3+2+3 is suggested.

download if embed doesn't work

And now let's add the drums. I took a standard kick, snare and high-hat pattern that comes with Logic Pro and modified it to give a machine-gun rhythmic interplay between both high-hat and kick drum.

download if embed doesn't work

While the interplay between kick and high-hat makes for an interesting rhythm, we'll eventually want to vary it subtly throughout the song to keep things more interesting. We'll worry about that later, though.

Here's a combined version with piano, bass and drums:

download if embed doesn't work

Note that all tracks had some compression and EQ. In a later post I'll talk a bit about that.

Then we'll add a few more tracks before moving on to composing the chorus, putting together the overall song structure and, of course, adding the vocals.

All material for this project is made available under a Creative Commons BY-NC-SA license so you are free to redistribute and remix with attribution but under the same license and not commercially.

by : Created on Nov. 16, 2008 : Last modified Nov. 16, 2008 : (permalink)

Category Feeds Available

I finally got around to doing something I've wanted to do for ages, only to discover it could be done in all of fifteen minutes. I'm talking about per-category feeds on jtauber.com

For over a year, my django-atompub has supported the parameterized feeds that django's built-in syndication feeds do but I never made use of them myself.

Fifteen minutes and roughly 20 lines of code later, the site now has a separate feed for each category. If you go to a page that is also a category, such as python or django or music theory or filmmaking or poincare project there will both be an extra feed advertised in the html head and a link from the "feed icon" after the list of "Pages in this category". So if you are only interested in a particular topic you can just subscribe to that (although I hope you don't—I did this more for topical aggregators)

One thing I always struggled with when thinking about category feeds before was how to handle subsumption relationships. If I put something in django should it automatically go in python? If in python then in some broader software category? or computing category? I certainly want to avoid the assumption of hierarchy (see some previous posts on that topic).

So for now I've left categories with no additional structure.

by : Created on Nov. 15, 2008 : Last modified Nov. 15, 2008 : (permalink)

Getting Xcode to Work on Mac OS X 10.5.5

This morning I tried to start up Xcode but it crashed on startup.

The console revealed:

layout bitmap too short: ASKScriptView

After a bit of searching I found this blog post. Although the main post wasn't quite relevant, one of the commenters described my problem:

Xcode 3.1 has been crashing for me since the 10.5.5 update with an error saying "layout bitmap too short: ASKScriptView". I couldn't find reference to this online and was completely stumped.

Another commenter said:

just get the '/usr/lib/libobjc.A.dylib' from a 10.5.4 system (Look for it in a 10.5.4 combo updater file) and swap it with the newer version. Then, it works again.

So I searched on Apple's site for the Mac OS X 10.5.4 Combo Update.

But then I was stuck with a 561MB .pkg I just needed extract one file from and I had no idea.

More searching revealed the shareware Pacifist from CharlesSoft which I promptly downloaded. It worked like a charm and let me extract just the libobjc.A.dylib I needed.

Now Xcode starts up fine! Sending my $20 in to Charles...

by : Created on Nov. 14, 2008 : Last modified Nov. 14, 2008 : (permalink)

RED Changes the Game Again

Back in 2005, I talked about the vague announcement of an upcoming a 2540p camera based on a full frame 4K CMOS from a company founded by the founder of Oakley. The camera, RED ONE, was launched in 2007 and was viewed by many as a game changer in digital cinematography.

Now RED is changing the game again. They've just announced a new modular system that separates out the sensor, lens mount, I/O, recording module, battery, viewfinder, remote, etc all as separate components you can mix and match. They also announced a line of sensor modules (what they call 'brains') that I'll talk about in a moment.

I'm blown away just like I was in 2005 and there is some nice tech pr0n at http://www.red.com/epic_scarlet/ for you to look at to see what I'm talking about.

In addition to the modular approach, which I find very compelling, the other thing that blew me away is a couple of the 'brains' they offer and their sensors.

I've talked about sensor sizes before. Most consumer video cameras have 1/3" sensors. Professional video cameras are generally 2/3". Even Star Wars Episode II was shot on 2/3" cameras capable of 1080-line resolution. Remember the 1920x1080 is 2 megapixels. Video was a fair way behind digital still cameras, which was why the announcement from RED back 2005 was so tantalizing. A typical DSLR has an APS-C sized sensor (or similar).

But look what RED has done now. They have sensors designated S35, FF35, 645 (medium format) and 617 (large format panoramic). I've shown all the sensors sized I've mentioned below for comparison (done in the same scale and style as my previous post on sensor sizes). Yes, that huge rectangle is (roughly, depending on your screen resolution) the sensor dimensions in actual size.

The full-frame FF35 is 24MP. So it's already as good as any professional DSLR and it can shoot video up to 100fps!!

The 645 is 56mm x 42mm and 65MP. The 617 is...wait for it...186mm x 56mm with 261MP. And both these shoot video at 50fps and 25fps respectively.

Finally, the dynamic range on the FF35, 645 and 617 are supposedly 13+ stops. That is incredible (although admittedly the larger pixels sizes of such large sensors make that possible)

Simply mind blowing!

by : Created on Nov. 13, 2008 : Last modified Nov. 13, 2008 : (permalink)

Book Meme

Too slow a tempo is unsuitable to the ornamental melodic motions characteristic of the third species.

from Counterpoint in Composition by Felix Salzer and Carl Schachter

Meme from Greg Newman, Justin Lilly and Brian Rosner:

- Grab the nearest book.

- Open it to page 56.

- Find the fifth sentence.

- Post the text of the sentence in your journal along with these instructions.

- Don't dig for your favorite book, the cool book, or the intellectual one: pick the CLOSEST.

by : Created on Nov. 12, 2008 : Last modified Nov. 12, 2008 : (permalink)

X will cost Y jobs

One type of clause that I have long had an issue with is that of the form

X will create Y jobs

which I most recently read in descriptions of the newly passed proposition for a high-speed rail project in California (which will, supposedly, create 450,000 jobs)

The problem is the assumption that job creation is a benefit. The benefit of the high-speed rail project is presumably supposed to be energy-efficient transportation that will reduce greenhouse gases. I'm all for that. But if you can achieve that with 350,000 jobs that's actually better than taking 450,000 jobs to do it.

Say two start ups are founded and one can build the product with 10 people and another will take 20 people to do the same thing. It seems crazy to say "well the second company is creating 10 extra jobs". No, the second company is wasting 10 jobs. Those people could be doing something more productive (either within the company or somewhere else).

And that gets to the heart of the matter. Creating jobs means taking people away from doing something else.

"What about helping unemployment?" you ask.

What are the chances that the 450,000 people that will work on the California rail project, will all otherwise be unemployed? Pretty slim. Now, to the extent that otherwise unproductive people are made productive (and are paid accordingly) then that is definitely a benefit.

Who knows, maybe all 450,000 people will be doing something more productive than they otherwise would have been doing. But the articles don't say "X will employ Y people more productively", they just say "X will create Y jobs" which, taken as is, is a cost, not a benefit.

I've suggested before that many precepts in economics come down to the concept of opportunity cost. This one is no different.

And, of course, this is all just economics 101. Go read Hazlitt's classic Economics in One Lesson. There's a nice treatment of the "make-work bias" in Bryan Caplan's excellent The Myth of the Rational Voter.

Let me finish with a classic story:

An economist visits China under Mao Ze dong. He sees hundreds of workers building a dam with shovels. He asks: 'Why don't they use a mechanical digger?''That would put people out of work,' replies the foreman. 'Oh,' says the economist, 'I thought you were making a dam. If it's jobs you want, take away their shovels and give them spoons.'

UPDATE: I actually preferred how I explain somethings in a comment below in response to a question so I thought I'd bump up my response into the main body:

whether diverting those 10 people to that startup is a good thing ultimately depends if the start up produces something worth that diversion. It is not prima facie a good thing just because it's using up 10 people. Similarly, the diversion of 450K people to build a high-speed train system may be a good thing (and hopefully will be) but would not be because it diverted 450K people, it would be that despite the fact it diverted 450K away from other things, it was still worth it for the benefit of having the train system.

by : Created on Nov. 12, 2008 : Last modified Nov. 12, 2008 : (permalink)

Storing HTTP_X_FORWARDED_FOR in Django

I occasionally get a

ProgrammingError: value too long for type character(15)

when people post to my blog. There aren't any fields in my model declared to have a max_length of 15 so I was always a little confused and in almost all cases, it was a spammer anyway so never took the time to investigate further.

But then someone just emailed me and told me they were getting a 500 when posting a comment to my blog. So I decided to investigate and that's where it started getting interesting...

Doing

./manage.py sql leonardo

revealed no sign of a field of length 15 either. So I went into the DB (in my case PostgreSQL)'s shell.

A quick

\d leonardo_comment

revealed

author_ipaddress | character(15)

Like many blogs, I capture the IP address of the commenter so I can block spam.

In my model I have:

author_ipaddress = models.IPAddressField(null=True)

Which Django's ORM translates to:

"author_ipaddress" inet NULL

which PostgreSQL is obviously storing as a character(15).

Why would an IP Address be more than 15 characters, though?

Well, I went back to the error log and noticed this:

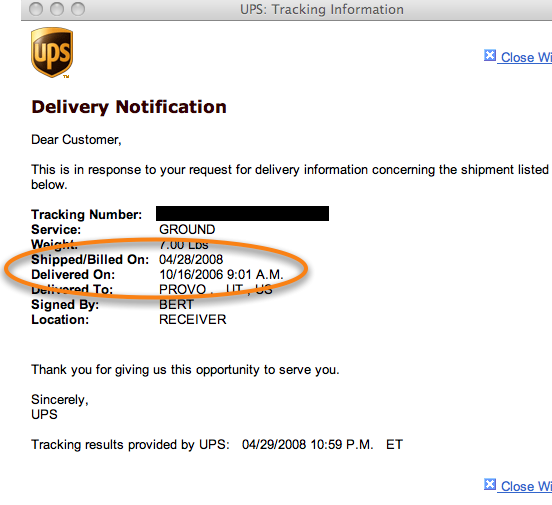

'HTTP_X_FORWARDED_FOR': '192.168.0.127, 12.34.56.78',

(note: I changed the second address to protect the original poster)

You see, because the Apache instance running django is behind another webserver (on the same machine), I can't rely on REMOTE_ADDR because it's always 127.0.0.1. So I log HTTP_X_FORWARDED_FOR.

What I didn't realise until now is that HTTP_X_FORWARDED_FOR can be a list.

I guess the best solution is to just change the field to a CharField.

Other Djangonauts who are logging HTTP_X_FORWARDED_FOR might want to heed this warning: don't use IPAddressField.

by : Created on Nov. 11, 2008 : Last modified Nov. 11, 2008 : (permalink)

Chrome Overtakes IE

I was just looking at the analytics for http://pinaxproject.com/ and noticed the following browser stats for the last month:

Firefox 68.79% Safari 13.84% Chrome 5.88% Internet Explorer 4.31% Opera 3.26%

What stood out to me is that more people have accessed the website using Chrome than IE!

by : Created on Nov. 10, 2008 : Last modified Nov. 10, 2008 : (permalink)

You Need More Than Equipment to be a Cinematographer

With its full frame 1080p video, I've seen a bunch of articles like this one that claim "The Canon 5D Mk II Will Turn Us All Into Professional Cinematographers".

Yes, the 5D Mk II, with a full-frame sensor and EOS lens system is potentially a game changer, but to suggest that we'll all be able to produce results like professional cinematographers is stretching it.

While a good understanding of photography helps with cinematography, it's necessary but not sufficient knowledge.

- composition is important but you also need to know how to move the camera during a shot

- you also need to understand concepts like "crossing the line"

- with the narrow depth of field full-frame affords, you need to be able to pull focus while shooting—that's a whole new skill to learn (and you probably need to use a follow focus)

- you can't rely on shutter speed or aperture to control exposure the same way you do with still photography—you have to learn to rely more on ND filters and lighting

- colour correction becomes even more important as mismatches are more jarring

I hope that the Canon 5D Mk II will encourage people to learn more about the art and craft of cinematography. But the equipment is not the end of the story, it's just the beginning.

by : Created on Nov. 10, 2008 : Last modified Nov. 10, 2008 : (permalink)

Groups, Tribes and Projects in Pinax

From fairly early on, Pinax had tribes and projects. The intention of the distinction was that tribes are a loose grouping of people with a common interest (see Seth Godin's great little book Tribes: We Need You to Lead Us) whereas projects were more focused around managing a group of people working on common tasks.

This distinction was reflected in the differences in implementation: projects are invite only, tribes are open to anyone. Projects have tasks, tribes don't. But there are a lot of similarities: both have threaded discussion, wikis and photo pools. There's also a lot of duplicated code.

It wasn't long before I realised that really projects and tribes we just two subclasses of the same class (or instances of the same metaclass—more on that in a moment). So one of the things we're working on for the next release of Pinax is to merge the two into a single model but then allow a site developer to create as many differently configured subclasses/instances of this model as they like.

We haven't worked out the details yet, but basically it would allow you to define a new subclass/instance of "group", pick the membership model and what apps and object types can be attached to it.

Quite separately, I've noticed that there is another kind of structure that tacitly exists between tribes on Cloud27. I started a Python tribe but others have started Ruby and PHP tribes. There are also geographic tribes and language-based tribes. So there is another sense in which a set of tribes could be labelled as "programming language" tribes, or "geographical" tribes or "natural language" tribes.

So we have:

- the root group type (that will unify projects and tribes)

- types of groups (e.g. projects, tribes, other combinations of features and membership patterns defined by site developers)

- instances of one of these types (e.g. a particular tribe, like 'Python')

- a category of instances (e.g. 'Programming Language Tribes')

To slightly complicate things, one could imagine an "Australian Pythonistas" tribe. Or various intersections of "movies", "food", etc with geography-based tribes (e.g. Italian Food, French Films). These sorts of semantic relationships seems quite orthogonal to defining a type of group that has a wiki but not threaded discussions or is invitation-only versus free-for-all.

I'm trying to wrap my head around which to view as instances versus subclasses. As a longtime data modeler, I thought I had a grasp on this stuff but my brain is hurting :-)

Any suggestions for a metamodel?

by : Created on Nov. 9, 2008 : Last modified Nov. 10, 2008 : (permalink)

From Focus and Directrix to Bézier Curve Parameters

For reasons that will become clear in a couple of posts, I wanted to be able to calculate quadratic Bézier curve parameters from a focus and horizontal directrix.

A focus and directrix are enough to define a parabola (in fact a parabola is the locus of points equidistance from a point, the focus, and a line, the directrix).

A quadratic Bézier curve is a section of a parabola and is defined by three points, according to the formula:

B(t) = (1-t)²P₀ + 2t(1-t)P₁ + t²P₂, t ∈ [0, 1]

Here's how I came to my result...

Given the directrix is horizontal,

- P₁ must have the same x-coordinate as focus

- P₁ must have an x-coordinate midway between P₀ and P₂

and by definition:

- the parabola's low point is midway between the focus and directrix

Now even though somewhat arbitrary and assuming the directrix is below the focus,

- P₀ and P₂ can have a y-coordinate of 0

So,

- the parabola's low point's y-coordinate is midway between P₀y and P₁y

- ⇒ the y-coordinate midway between focus and directrix is midway between P₀y and P₁y

- ⇒ the y-coordinate midway between focus and directrix is half P₁y

Therefore,

- P₀ = (Fx - α, 0)

- P₁ = (Fx, Fy + Dy)

- P₂ = (Fx + α, 0)

for some α where (Fx, Fy) is focus and Dy is y-coordinate of directrix.

By definition,

- P₀ must be equidistant between focus and directrix

- P₂ must be equidistant between focus and directrix

But, given P₀ and P₂ have a y-coordinate of 0 and directrix is horizontal,

- distance between P₀ and focus = Dy

- distance between P₂ and focus = Dy

Therefore,

- (P₀x - Fx)² + (P₀y - Fy)² = Dy²

- ⇒ ((Fx-α) - Fx)² + (0 - Fy)² = Dy²

- ⇒ (-α)² + (-Fy)² = Dy²

- ⇒ α² + Fy² = Dy²

- ⇒ α = √(Dy²-Fy²)

And so, assuming the directrix is horizontal and below the focus, the following Bézier curve parameters can also be used to create the parabola:

- α = √(Dy²-Fy²)

- P₀ = (Fx - α, 0)

- P₁ = (Fx, Fy + Dy)

- P₂ = (Fx + α, 0)

UPDATE (2008-11-29): Now see Voronoi Canvas Tutorial, Part III for the motivation for wanting to do this as well as an implementation in Javascript for drawing parabolae in a canvas element.

by : Created on Nov. 8, 2008 : Last modified Nov. 29, 2008 : (permalink)

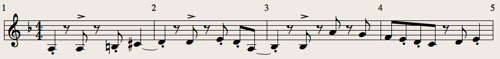

Song Project: LH Piano Riff

Having introduced the right hand of the main piano riff, let's introduce the left hand.

Firstly, here's what it sounds like by itself:

download if embed doesn't work

And together with the right hand:

download if embed doesn't work

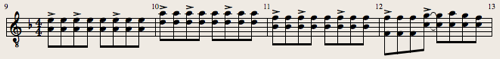

And here's what it looks like in the score:

Now let's analyze it a little. Note that this is post hoc analysis, I didn't go through this thinking when I wrote it (at least not consciously)—it was improvised at the piano—but I find it interesting to go back and see why things worked or generated the particular effect they have.

One thing that immediately stands out is that the 3+3+2 rhythmic grouping found in the right hand is also found here (with one exception we'll come to in a minute). Also, if you look at what note is playing at the start of each of the 3+3+2 grouping: A A C♯ | D D D | B♭ B♭ - | F C E it is always the root of the current chord on the first two beats and either the root, the third or nothing on the final beat.

In the first bar, there is an additional passing note, B (notice it's natural despite the key, because the chord is A) and the C♯ is the leading note into the D chord in the next bar. The slur emphasizes this role.

In the second bar, the E is just a neighbour note between two repetitions of the root D. The final, non-accented A is in the triad of the chord but it's also the leading note of the following B♭ chord and again leading note is slurred.

In the fourth bar, the first E and D are just passing notes taking us from the F root to the C root. The final E is the third of the chord, the 5th of the A chord we return to if we repeat but it's also the leading note of the tonic F (which we'll take advantage of later). It's a great example of a note playing multiple functions both in terms of the current chord and what may follow.

The third bar (which I deliberately left until last to discuss) is a little unusual. If you imagine the second B♭ an octave higher, it's a little easier to see what's going on. In that case, the A and G are just passing notes down to the F in the next bar. But what is a little unusual is firstly that the A and G are not following the 3+3+2 pattern. It is almost as if the pattern has switched to 3+2+3 this bar. Secondly the A is quite dissonant, especially given it's only a semitone away from a note being played in the right hand. This rhythmic change coupled with the dissonance builds a nice tension that is then resolved with what I called a "triumphant" chord in a comment on the previous post.

You may notice a bit of chorusing in the piano sound of the recordings so far. Actually, there's some compression, chorusing and EQ on them, all preempting the mixing that is to follow once we add more instruments. I'll talk about each of these once we've added a few more tracks.

All material for this project is made available under a Creative Commons BY-NC-SA license so you are free to redistribute and remix with attribution but under the same license and not commercially.

by : Created on Nov. 8, 2008 : Last modified Nov. 8, 2008 : (permalink)

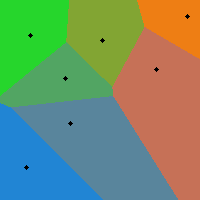

Voronoi Diagrams

Back in Creating Gradients Programmatically in Python I presented some code for writing out PNGs according to some rgb-function of x and y.

The relevant write_png(filename, width, height, rgb_func) is here:

http://jtauber.com/2008/11/png.py

This enables one to quickly generate PNGs based on all sorts of functions of position.

For example, here's a Voronoi diagram:

Take any metric space and pick a discrete number of points in that space we'll designate "control points" (the black dots in the above example). Now colour each other point in the space based on which control point is closest. In other words, two points are the same colour if and only if they have the same "closest control point".

Here's how to generate such a diagram using write_png...

First of all, here's a function that given an (x, y) coordinate and a list of control points, returns a pair of:

- which control point is the closest to (x, y)

- what the distance was

def closest(x, y, control_points): closest = None distance = None for i, pt in enumerate(control_points): px, py = pt d = ((px - x) ** 2 + (py - y) ** 2) if d == 0: return i, 0 if d < distance or not distance: closest = i distance = d return closest, distance

Now we can use this and write_png to generate a PNG Voronoi diagram for a given set of control points: